Finding the needle in your haystack of event data

Uplevel your user behavior analytics by building context and questioning assumptions

Last week, I explored why user behavior insights are so elusive, despite the prevalence of user journey visualizations in Product Analytics platforms. I articulated three challenges blocking that deep insight:

The most insightful user paths aren’t the most common, so we need another way to identify that signal amidst all the noise.

The most meaningful events aren’t tracked, because they sit on the seams between features or product teams.

The most common visualizations aren’t designed to let the key insights pop, making them harder to discover.

To tackle these challenges, my past teams have relied on two key strategies: Building context to surface the highest-signal user paths, and questioning assumptions baked into user behavior analytics tools. Today, I’ll share some detail on how these strategies have led to better outcomes for my teams. Let’s dive in!

Building context: Partnering with UX Research to hone your focus

When I hear that a Product Analytics team is struggling to show value from their user behavior analytics, there are two questions I start with: Does your company have a UX Research function? And, have they completed any benchmark studies?

If you're not familiar with UX benchmark studies, leaning on prior familiarity with benchmark metrics in general gets you most of the way there.[1] A UX benchmark study is focused on establishing a stable list of core product tasks, and evaluating a set of metrics against those tasks based on participants' performance. Common metrics include task success, time on task, and some notion of satisfaction (UXR has a few common instruments they employ). The benchmarking is typically internal; that is, benchmark studies are run on a regular cadence, and compared with the initial, or baseline, results.

Given the task focus, you might already see some overlap with your user behavior analysis efforts, but let’s be explicit about what you should seek to understand. A UX Researcher who has run a benchmark study on a product has, at their disposal:

A list of the product's Pri1 tasks, from the user's perspective.

Speaking from experience, this is a high-value asset that is non-trivial to produce, since Product and Engineering can get hyper-focused on the new features of the moment. This list can be a useful resource to recenter on a holistic view of the product, and can be helpful in filtering Product Analytics visualizations of user behavior.

Start, end, and intermediate points for each task.

In building the test, the UX Researcher will plan where in the product the user should start for a given task, and what constitutes success. Leverage this to check the events you’re tracking for completeness.

A read on which tasks are more challenging, along with common pitfalls.

This is critical insight on the nature of challenges users face. With this, you can build a mental model for what you're looking for in your user behavior data, and whether the visualizations at your disposal are suitable for finding those insights.

I hear you asking: What if there isn’t a UX Research team at my company? Or maybe you have UXR, but they’re more focused on specific feature testing than benchmarking.[2] If you find yourself in this position, you should still lead the effort to build out the context you need!

Build a list of 2-5 of the most important tasks in your product, from the user's perspective.[3]

Review that list with Product and Engineering stakeholders. Build consensus on the top 2-5 Pri1 tasks in your product.

Step through those tasks, while trying to adopt a user's mindset. Where might they be led astray? Where are there multiple paths to success? If you can identify your own usage data, this can help you validate the presence, or absence, of key telemetry events based on your mock usage.
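The telemetry check in that last step can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed method: the task names, event names, and the `missing_events` helper are all hypothetical, and it assumes you can export the list of event names logged during your own mock usage.

```python
# Hypothetical task definitions: each Pri1 task lists the events you expect
# at its start, intermediate, and end points. All names are illustrative.
TASKS = {
    "create_report": ["report_new_clicked", "report_data_selected", "report_saved"],
    "share_report": ["share_dialog_opened", "share_recipient_added", "share_sent"],
}


def missing_events(tasks, observed_events):
    """Return, per task, the expected events that never appear in the logs."""
    observed = set(observed_events)
    gaps = {}
    for task, expected in tasks.items():
        absent = [event for event in expected if event not in observed]
        if absent:
            gaps[task] = absent
    return gaps


# Event names captured while stepping through the tasks yourself (mock usage).
my_session_events = [
    "report_new_clicked", "report_saved",
    "share_dialog_opened", "share_recipient_added", "share_sent",
]

gaps = missing_events(TASKS, my_session_events)
print(gaps)
```

Any task that shows up in `gaps` has a step you performed that left no trace in the event stream, which is a good prompt for a conversation with the team that owns that part of the product.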

With this added context, we're ready to revisit our user behavior analysis, with an eye toward the assumptions that underlie common tools.


Questioning assumptions: Rethinking exploratory user behavior analysis

Think back now to the funnel, task flow, and Sankey diagrams I showed last week (or, whatever tools you've used in your own user behavior analyses). What are the key assumptions behind these visualizations? Here are a few that I commonly call out:

All movement happens left-to-right (or top-to-bottom, for a traditional funnel).

We consider each user session for the visualization, with session events ordered by when they occurred (timestamp).

Each event is optional. We can choose to ignore events before a particular event (start), after a particular event (end), or remove a particular event from the visualization entirely (irrelevant).
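To make these assumptions concrete, here is a rough Python sketch of the preprocessing they imply. It is an illustration only: the `session_paths` function, its parameters, and the event names are hypothetical, and it assumes events arrive as `(session_id, timestamp, event_name)` tuples.

```python
from collections import defaultdict


def session_paths(events, start=None, end=None, ignore=()):
    """Build one ordered event path per session.

    events: iterable of (session_id, timestamp, event_name) tuples.
    start:  drop everything before the first occurrence of this event.
    end:    drop everything after the first occurrence of this event.
    ignore: event names removed from every path as irrelevant.
    Sessions that never contain `start` or `end` are left untrimmed.
    """
    by_session = defaultdict(list)
    for session_id, timestamp, name in events:
        by_session[session_id].append((timestamp, name))

    paths = {}
    for session_id, rows in by_session.items():
        # Assumption 2: order each session's events by timestamp.
        names = [name for _, name in sorted(rows)]
        # Assumption 3: every event is optional.
        names = [n for n in names if n not in ignore]
        if start is not None and start in names:
            names = names[names.index(start):]
        if end is not None and end in names:
            names = names[: names.index(end) + 1]
        paths[session_id] = names
    return paths


events = [
    ("s1", 3, "export"), ("s1", 1, "home"), ("s1", 2, "search"), ("s1", 4, "logout"),
    ("s2", 1, "search"), ("s2", 2, "tooltip_shown"), ("s2", 3, "export"),
]
paths = session_paths(events, start="search", end="export", ignore={"tooltip_shown"})
print(paths)
```

Each resulting path is a flat, forward-only list, which is exactly the shape that funnel and Sankey tools draw left-to-right.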

I tend to question the first assumption. Especially as a product's complexity grows, a user's movement through that product is not ever-forward. Users may repeat steps. They may explore broadly before committing to go deep on a task. They may get so confused that they start over. At best, common visualizations represent all of these scenarios the same way: as a longer path. Some visualizations may gloss over these scenarios altogether.

With a clearer understanding of what meaningful user behavior looks like, it’s time to rethink how we explore that behavior. Let’s dive into a real-world example from one of my teams to spark new ideas for approaching these explorations.

Rethinking the Sankey diagram

To set the stage: Our product was newer on the market. We were confident in the value it provided, but as with anything new, there was a learning curve involved in getting the most out of the product. So we were especially interested in finding situations where users "started over": going multiple steps down a task, only to backtrack or switch gears to a different task altogether.

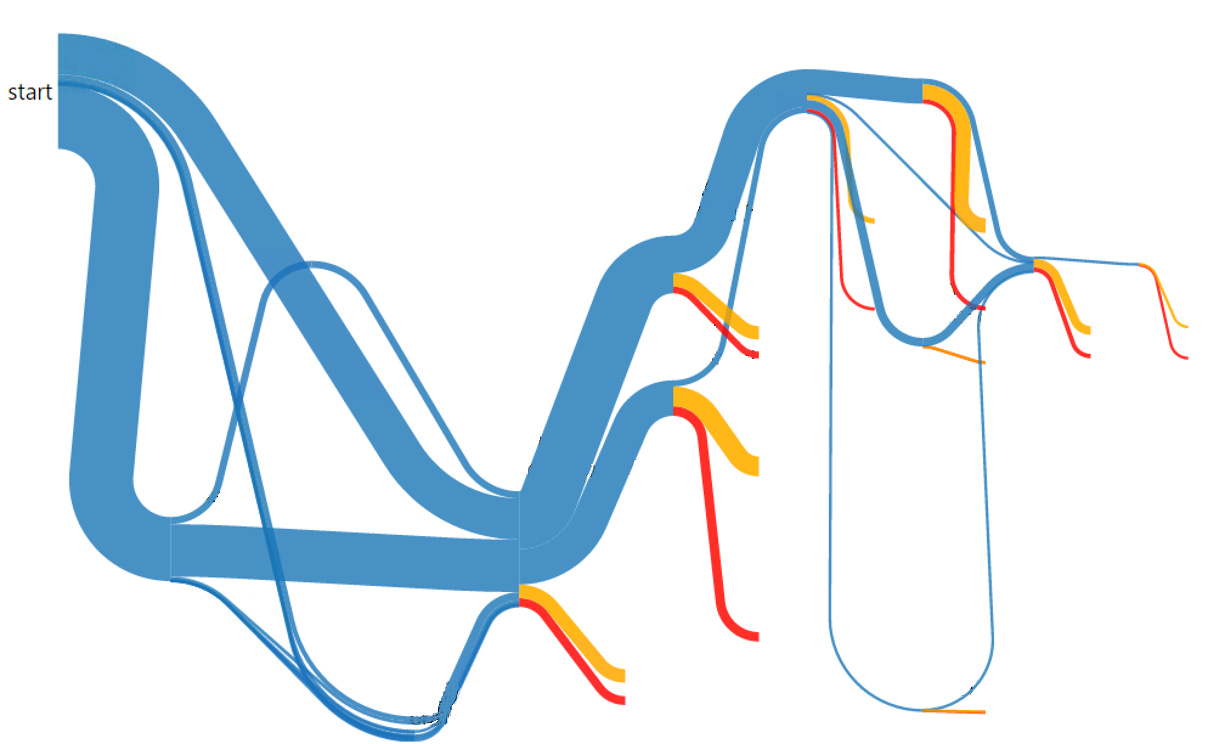

My Data Science team discussed different visualizations that may be appropriate to get a visual representation of user behavior. One colleague took inspiration from the Sankey diagram, and built a custom version for the product:

He introduced a start node at the beginning, which connected to 2-5 starting points for the core tasks in the product. The start node itself was not an event.

He considered pairs of events that made material progress toward task completion, based on context from a UX benchmark study. Users who followed these progressions are represented in blue.

Any event progression (A->B) that wasn't in his list of event pairings was considered starting over, represented in yellow. Users who went down the yellow path returned to start.[4]

Similar to most Sankey diagrams, red paths represented users whose session ended at that step.
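The classification rules above can be sketched roughly as follows. This is my own minimal reconstruction for illustration, not my colleague's actual code: the event names, the `PROGRESS_PAIRS` set, and the `classify_links` helper are hypothetical, and the sketch assumes that after a start-over, the next event is drawn as a fresh link out of the start node.

```python
from collections import Counter

START = "START"  # synthetic node, not a tracked event
EXIT = "EXIT"    # synthetic node marking the end of a session

# Hypothetical event pairs that count as material progress toward a task,
# e.g. drawn from a UX benchmark study.
PROGRESS_PAIRS = {("A1", "A2"), ("A2", "A3"), ("B1", "B2")}


def classify_links(path):
    """Turn one session's ordered events into (source, target, kind) links."""
    links = []
    current = START
    for event in path:
        if current != START and (current, event) not in PROGRESS_PAIRS:
            # Not a known progression: the user "starts over" (yellow).
            links.append((current, START, "start_over"))
            current = START
        # Leaving START or following a listed pair is progress (blue).
        links.append((current, event, "progress"))
        current = event
    if path:
        # The session ended at this step (red).
        links.append((current, EXIT, "exit"))
    return links


sessions = [
    ["A1", "A2", "B1", "B2"],  # abandons task A after two steps, switches to B
    ["A1", "A2", "A3"],        # completes task A
    ["A1", "B1"],              # backtracks right away
]

# Link widths for the diagram: how many times each link was traversed.
link_counts = Counter(link for path in sessions for link in classify_links(path))
for (source, target, kind), count in sorted(link_counts.items()):
    print(f"{source} -> {target} [{kind}]: {count}")
```

The resulting counts map directly onto the source, target, and value inputs that most Sankey plotting libraries expect, with `kind` driving the link color.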

I get that an unlabeled Sankey diagram for an unknown product can be hard to parse, but despite that, you can still see where “start overs” are showing up in the flow. Take a look at the middle of that image. See the thin blue line arcing up, above the much larger yellow and red lines? That step clearly had a massive issue moving users through their task. This visualization put a spotlight on it, spurring the team to action. That's just one of many examples of how this visualization was applied. In my 18 years supporting Product teams, I've never seen a team of PMs more engaged with a data team asset.

I don't mean to suggest this exact visualization is a gold standard for user behavior analysis. This is one example of a quick-and-dirty exploration that provided a lot of value for that particular product, at that particular moment in its history. The right data asset to provide that level of deep insight will look different in other contexts,[5] but it always starts with rethinking the assumptions behind the common tools for user behavior analysis.

The next time you find yourself spinning your wheels on user behavior analysis, take a step back. Ground your work in the tasks that truly matter — whether by partnering with UX Research or defining them yourself. Then, challenge the built-in assumptions of your analytics tools. These two shifts can turn your noisy event stream into a wellspring of meaningful insights.

[1] For those not familiar with benchmarking at all, it's essentially a structured approach to comparing your metrics against internal or industry targets (the benchmarks) to provide additional context on those metrics. In the automotive industry, for example, manufacturers use benchmarking extensively to understand their competitive position and how new vehicle models will perform in the market.

[2] This isn't uncommon. Done correctly, benchmark studies can be expensive and time-intensive, which doesn’t always align with a UXR team’s budget and objectives.

[3] Anticipating a commonly proposed Pri1 task: Sign up, while important for new user adoption, is not why users are interested in your product. By design, sign up flows should be linear and very simple. You can use traditional funnel and task flow diagrams to optimize them. So if I've enticed you to build this task list, go deeper. What did users actually come to your product to do?

[4] It's like Chutes and Ladders for grown-ups, complete with the frustration of landing on a chute and having to make your way through that part of the board (or, here, the product) yet again.

[5] Here’s another example to keep the wheels turning, from YouTube in 2010. In this context, repeated actions aren't a bad thing, since YouTube wants you to watch one video after another. By using a Sunburst diagram, Kerry Rodden helped the important information pop. Track the decay patterns as you move from the center outwards. A slow decay means strong, continued engagement, whereas a fast decay means a quick path out of the product.